Well, after three days of work, I finally have a working lightmap renderer… I’m not very happy with the results, to be honest, but there’s still some room for improvement… To go further, I need a real example to work with, with some moving characters, so I can see how enabling/disabling lights will work and what effect a priority queue for the light updates will have (so I can have stuff that only updates once every four or five frames)… More on this below…

Anyway, as I said in my previous post, I’m using the UV unwrapper that comes with D3DX, since this is just for a preprocess tool… The results are quite nice, but I had to tweak it a bit…

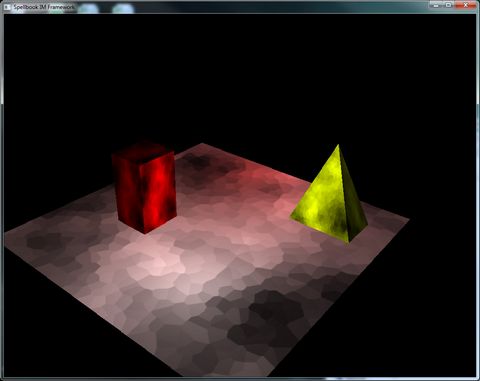

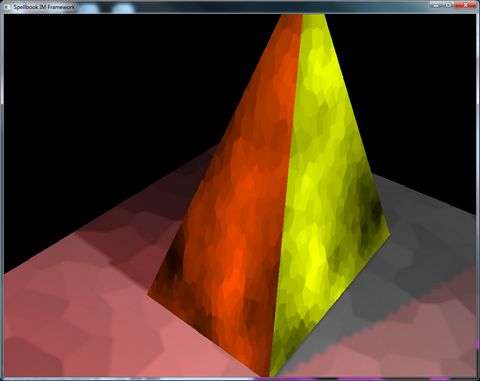

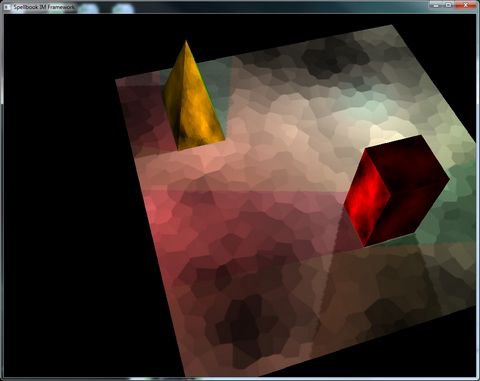

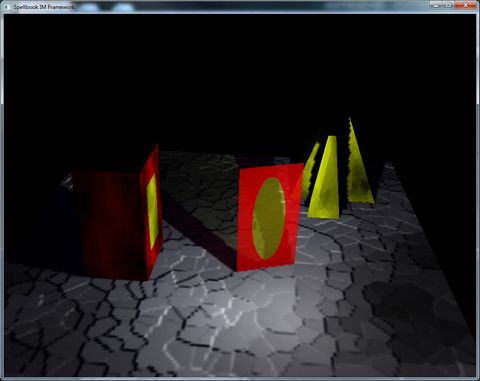

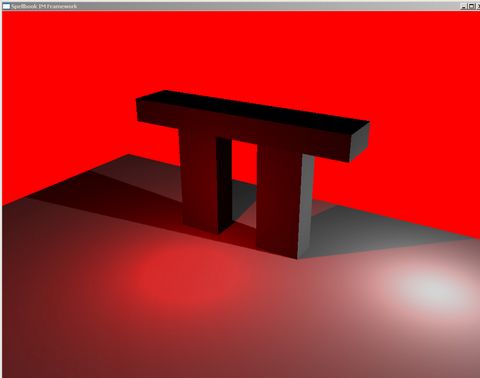

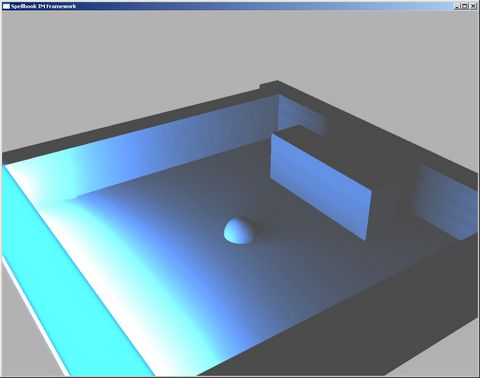

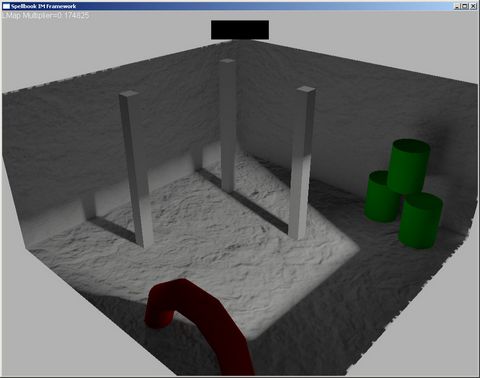

This was my first test scene, rendered without shadowmaps in my normal deferred renderer… The objects only have diffuse color maps.

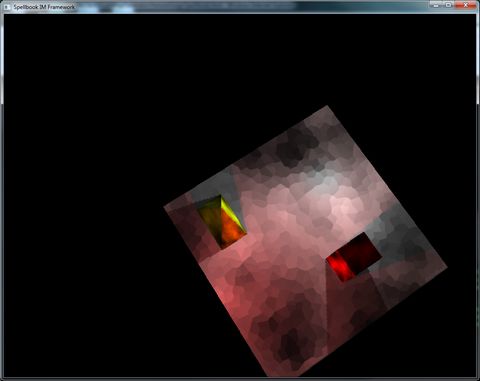

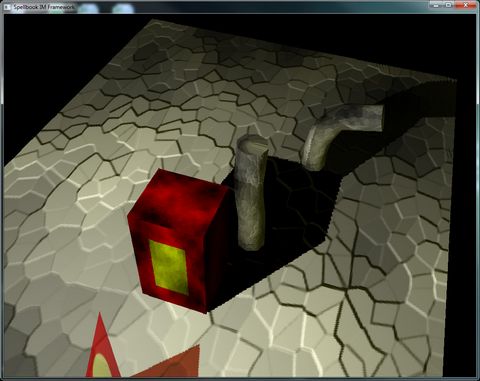

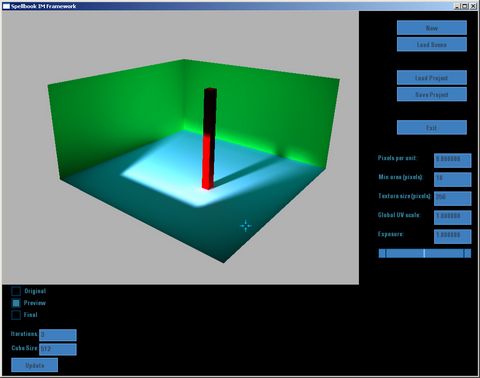

This is the first iteration of the lightmap (rendered in 6 seconds in debug mode, a single 256×256 map).

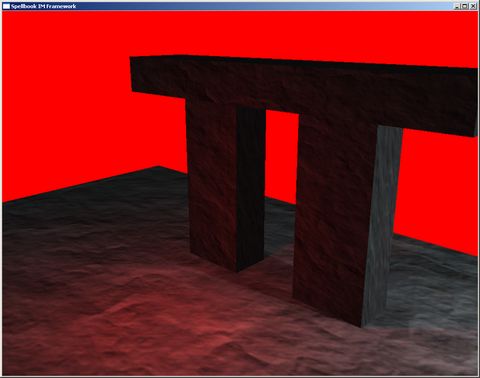

A closer look shows some edge artifacts… This got solved by simply doing a "bleed" effect on the generated lightmap.
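For reference, a "bleed" pass can be sketched roughly like this. This is a minimal sketch, not my actual tool code: the `Texel` struct, its `covered` flag and the `bleedPass` name are made up for illustration, and it assumes the baker marks which texels it actually wrote to. Each uncovered texel that touches a covered one takes the average color of its covered neighbors, so bilinear filtering near chart borders no longer pulls in black.

```cpp
#include <cassert>
#include <vector>

// One RGB lightmap texel plus a flag telling whether the baker wrote to it.
// (Hypothetical layout, for illustration only.)
struct Texel {
    float r = 0.0f, g = 0.0f, b = 0.0f;
    bool covered = false;
};

// Runs one dilation pass: every uncovered texel that touches at least one
// covered texel takes the average color of its covered 8-neighbors.
// Returns the number of texels filled in this pass.
int bleedPass(std::vector<Texel>& texels, int width, int height) {
    assert(static_cast<int>(texels.size()) == width * height);
    // Read from a snapshot so the result does not depend on scan order.
    const std::vector<Texel> src = texels;
    int filled = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (src[y * width + x].covered) continue;
            float r = 0.0f, g = 0.0f, b = 0.0f;
            int count = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const Texel& n = src[ny * width + nx];
                    if (!n.covered) continue;
                    r += n.r; g += n.g; b += n.b;
                    ++count;
                }
            }
            if (count == 0) continue;  // no covered neighbor yet, try next pass
            Texel& out = texels[y * width + x];
            out.r = r / count;
            out.g = g / count;
            out.b = b / count;
            out.covered = true;
            ++filled;
        }
    }
    return filled;
}
```

Calling it a couple of times widens the border by one texel per pass; one or two passes should be enough to cover what bilinear filtering can reach.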

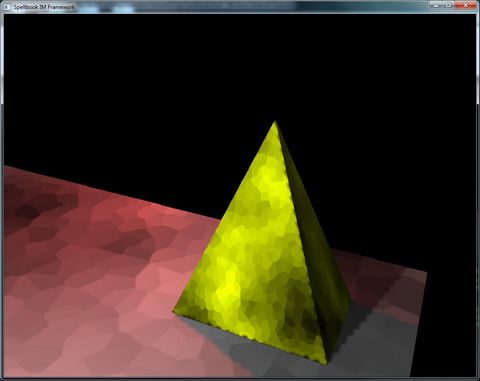

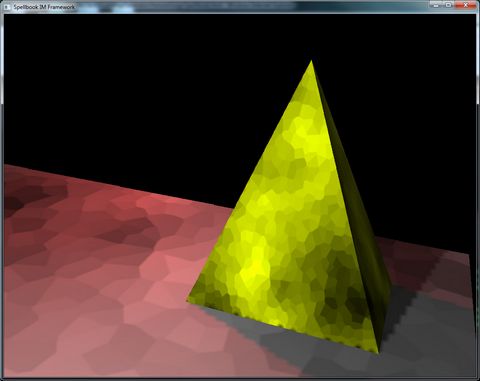

The black spots at the bottom of the pyramid are due to sampling artifacts (its base is at the same height as the plane, so the two intersect). This can be solved through multisampling (on my wish list).

Here I had a big error (didn’t take a screenshot), where the orange and the yellow would fight, since that edge was shared in the lightmap. Fixing this was just a matter of tweaking the UV generation so that the edge wouldn’t be shared: I added code so that triangles whose normals differ by more than 30 degrees aren’t considered adjacent, and the results were good.
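The adjacency test itself boils down to comparing face normals. Here is a minimal sketch of the idea; `Vec3`, `faceNormal` and `canShareEdge` are illustrative names, not the tool's real code, and how the result gets fed to the unwrapper isn't shown. It assumes unit-length normals and compares the dot product against the cosine of the limit, which avoids an `acos` per pair.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

Vec3 sub(const Vec3& a, const Vec3& b) {
    return { a.x - b.x, a.y - b.y, a.z - b.z };
}

Vec3 cross(const Vec3& a, const Vec3& b) {
    return { a.y * b.z - a.z * b.y,
             a.z * b.x - a.x * b.z,
             a.x * b.y - a.y * b.x };
}

// Unit-length normal of the triangle (p0, p1, p2).
Vec3 faceNormal(const Vec3& p0, const Vec3& p1, const Vec3& p2) {
    const Vec3 n = cross(sub(p1, p0), sub(p2, p0));
    const float len = std::sqrt(dot(n, n));
    assert(len > 0.0f);  // degenerate triangles have no usable normal
    return { n.x / len, n.y / len, n.z / len };
}

// Two triangles may share an edge in the UV atlas only if the angle between
// their (unit) normals is at most maxAngleDegrees.
bool canShareEdge(const Vec3& n0, const Vec3& n1, float maxAngleDegrees = 30.0f) {
    const float kPi = 3.14159265358979f;
    const float cosLimit = std::cos(maxAngleDegrees * kPi / 180.0f);
    return dot(n0, n1) >= cosLimit;
}
```

With the 30 degree limit, a floor and a wall (90 degrees apart) end up in separate charts, while the faces of a gently curved surface still stay together.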

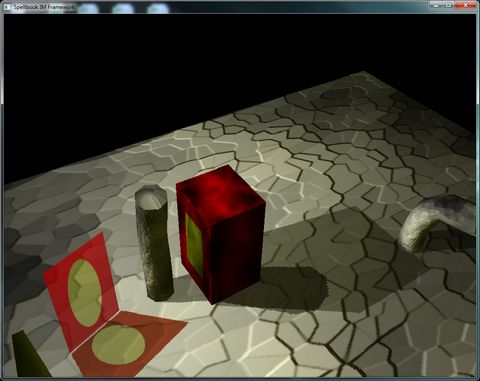

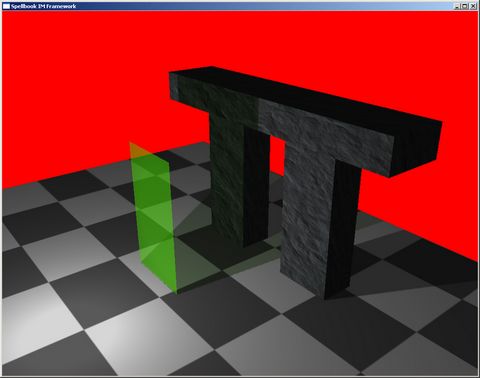

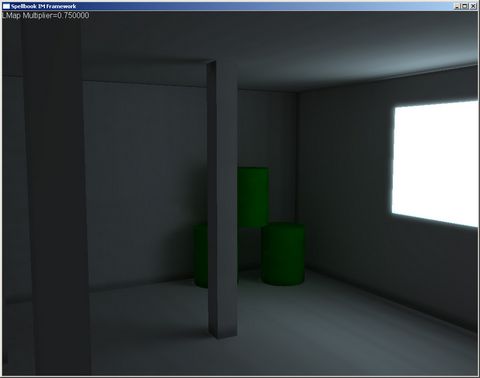

Mixing static and dynamic lights worked well. The red shadow cast by the red block is the result of a light circling the scene. Note that the edges are much more defined.

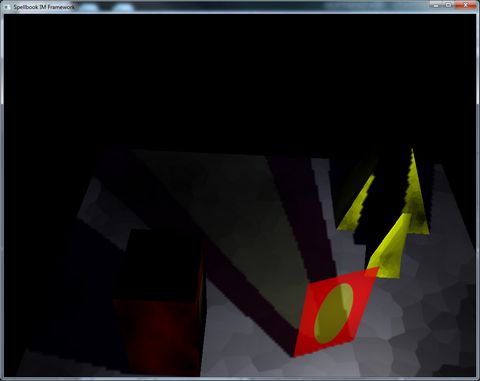

Adding the stained-window effect was pretty easy with the current system, and I love it, to be honest… 🙂

Adding normal mapping was also pretty easy, but problems become evident when we zoom in:

The lack of resolution of the lightmap shows pretty obviously… I think this can be solved through multisampling, or just by sampling the normal map in a way that takes the lightmap resolution into account… But it’s pretty obvious that normal maps won’t work well in lightmaps, since lightmaps are good for low-frequency lighting, and normal maps add high-frequency data to it… There are other solutions for this, like what Valve did in the Source engine: http://www2.ati.com/developer/gdc/D3DTutorial10_Half-Life2_Shading.pdf

This takes into account the direction the light is coming from and applies normal mapping at runtime on top of the lightmapping, which improves the results quite considerably, besides enabling us to use specular highlights as well. I still need to think about whether this is worth implementing, since I’m not sure about lightmaps anyway… Since I have my own renderer, I can change it to suit me…

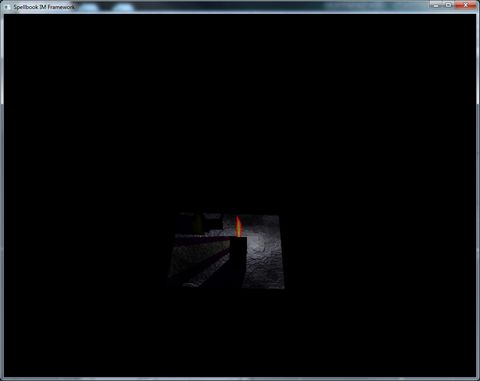

The worst problems came with the dynamic objects… Casting shadows on dynamic objects is simple enough, just use the standard shadowmap technique… Problem is that dynamic objects cast shadows on static (lightmapped) objects, and this is where things become complicated…

The way I solved it was by rendering the static lights three times… The first time is the generic lightmap pass, in which all static objects are rendered and a stencil mask is created: 0 in the stencil buffer means that pixel comes from a dynamic object, 1 means it’s from a static object.

The second time is for the dynamic objects (stencil buffer=0), and the results are as expected (both the static objects and the dynamic objects cast shadows on the dynamic objects).

The third time is for the static objects (stencil buffer=1), and it requires a subtractive pass… Basically, for each pixel of a static object, I compute the light that would reach that point, check if the point is being shadowed and, if so, subtract that light from what’s in the render buffer. The problem with this approach is that the lightmapped shadows will be twice as dark: they’re already baked into the lightmap, and since the static objects also show up in the shadowmap, the same light gets subtracted a second time.

To fix this, I had to find a trick, which almost does it… Basically, when rendering the shadowmap, I render the pixels in the [0..1] range if the object is static, and in the [1..2] range if the object is dynamic. Then, when I’m computing the subtraction, I only account for the dynamic objects (since the shadows cast by static objects are already accounted for in the lightmap). As I said, this almost works, with two caveats:

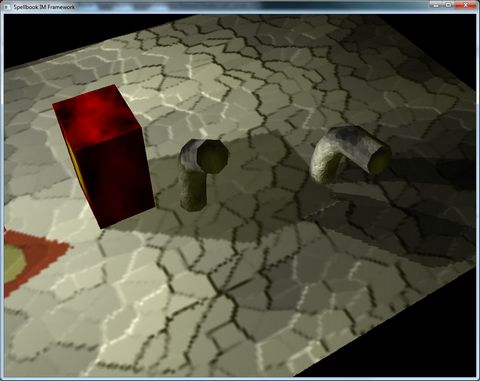

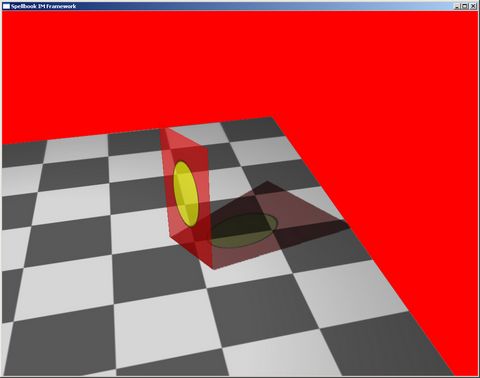

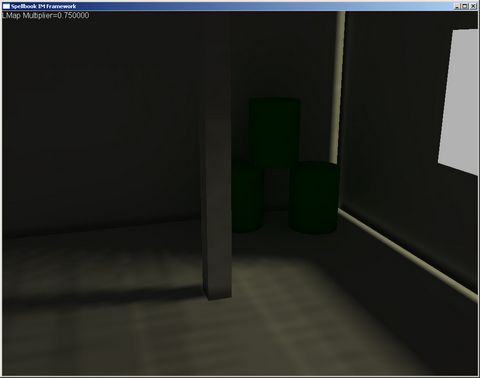

In this screenshot, you can see the "ragged edge" between the static-cast shadow of the block and the dynamic-cast shadow of the skinned cylinder… This is due to the resolution of the shadow map, and it’s extremely hard to get rid of without raising the shadowmap’s resolution to prohibitive values… I think we might be able to solve this by multi-sampling the shadowmap, but that would require more cycles, and for the game we’re working on, I don’t think these small mistakes will matter…

A slightly worse problem:

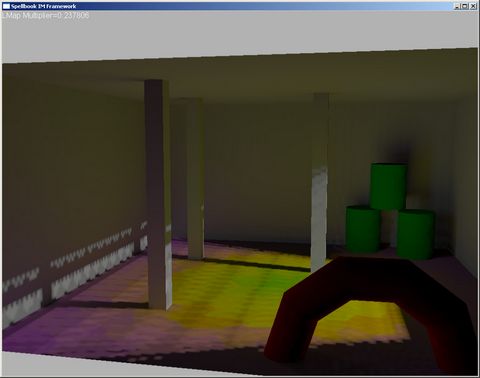

The shadow from the cylinder in this case subtracts light from the block-cast shadow, which shouldn’t happen… This is due to the fact that the computed shadowmap only "sees" the cylinder, and not the block behind it, so the system doesn’t know that the block is also casting a shadow at that pixel… I can think of a couple of ways to solve this (two different shadowmaps for static and dynamic objects, for example), but most of them just consume more GPU/memory/etc, and for a small gain…
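Going back to the range trick for a moment, this is roughly what the encoding and the test in the subtractive pass look like, written as plain C++ instead of shader code. It's a sketch under assumptions: the function names and the bias value are made up, and I treat the ranges as half-open ([0, 1) for static casters, [1, 2) for dynamic ones) so a stored value is never ambiguous.

```cpp
#include <cassert>

// Encodes a normalized light-space depth into the shadowmap.
// Static casters keep their depth in [0, 1); dynamic casters are shifted
// into [1, 2). (Half-open ranges are an assumption of this sketch.)
float encodeShadowDepth(float depth01, bool casterIsDynamic) {
    assert(depth01 >= 0.0f && depth01 < 1.0f);
    return casterIsDynamic ? depth01 + 1.0f : depth01;
}

struct ShadowSample {
    float depth01;         // caster depth back in the [0, 1) range
    bool casterIsDynamic;  // which kind of object wrote this texel
};

ShadowSample decodeShadowDepth(float stored) {
    const bool dynamicCaster = stored >= 1.0f;
    return { dynamicCaster ? stored - 1.0f : stored, dynamicCaster };
}

// True when the subtractive pass should remove the light from a static
// pixel: the pixel is occluded AND the nearest occluder is dynamic.
// Shadows from static occluders are skipped, since the lightmap has them.
bool shouldSubtractLight(float stored, float receiverDepth01, float bias = 0.002f) {
    const ShadowSample s = decodeShadowDepth(stored);
    const bool occluded = receiverDepth01 > s.depth01 + bias;
    return occluded && s.casterIsDynamic;
}
```

The second caveat is visible right in `shouldSubtractLight`: a texel only stores the nearest caster, so when the cylinder is in front of the block the sample says "dynamic" and the light is subtracted, even though the lightmap already removed it for the block.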

Finally, the biggest problem: due to the limited precision of the render buffer (8 bits per channel), if we have multiple lights illuminating a place and something casting a shadow on that place, the shadows will become darker than they should… The reason for this is the following:

Imagine 2 lights illuminating a certain pixel… Light A contributes 80% light, light B contributes 60% light… Summed together, this is 140% light, which gets clamped in the buffer to 100%. Then, we want a dynamic object casting a shadow on that spot (because it blocks light B). Because of the current algorithm, light B is subtracted from the frame buffer, which makes the intensity there go to 40%, instead of the 80% it should be…
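The same arithmetic in code, with intensities as integer percentages to keep it exact. This is just a toy model with made-up function names: the "LDR" version clamps after every blend like an 8-bit target does, while the "HDR" version only clamps the final value.

```cpp
#include <algorithm>
#include <cassert>

// Additive and subtractive blends on a buffer that saturates at 0% and 100%,
// like an 8-bit-per-channel render target.
int addClamped(int a, int b) { return std::min(a + b, 100); }
int subClamped(int a, int b) { return std::max(a - b, 0); }

// Both lights are accumulated (and clamped), then light B is subtracted
// because a dynamic object blocks it.
int shadowedLdr(int lightA, int lightB) {
    const int lit = addClamped(lightA, lightB);  // 80 + 60 saturates to 100
    return subClamped(lit, lightB);              // 100 - 60
}

// Same passes on a buffer that can hold values above 100%;
// clamping happens only when the final value is displayed.
int shadowedHdr(int lightA, int lightB) {
    const int lit = lightA + lightB;             // 140, kept as is
    return std::min(lit - lightB, 100);          // 140 - 60
}
```

`shadowedLdr(80, 60)` gives 40 and `shadowedHdr(80, 60)` gives the expected 80. The information lost by the clamp in the first pass is exactly what makes the shadow too dark.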

This could be fixed with HDR buffers (which wouldn’t be so hard to add), but by this time I get the feeling that I’m just fixing one problem after another, without having a complete lighting solution, or worse, having a complete lighting solution that requires too much tweaking…

So now I’m thinking that I may ditch all of this and go for a fully dynamic lighting system. Of course, I can’t have 100 lights casting shadows, etc, but I think I can reduce the cost substantially by making lights that aren’t near the player use less resolution and update less frequently, and by interpolating the lights to get the correct results… Now I just need a working scenario so I can see the impact of this, with some characters moving around and some real geometry to check performance…
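To make the "update less frequently" part a bit more concrete, here's how I imagine the priority queue could work. None of this exists yet: the names and the distance thresholds are placeholders I picked for the sketch, and the interpolation between updates is left out. Lights are ordered by the frame at which they're next due, and far-away lights get a longer interval.

```cpp
#include <cassert>
#include <queue>
#include <vector>

struct ScheduledLight {
    int lightId;
    int nextFrame;  // frame at which this light should be refreshed
    int interval;   // frames between refreshes
};

// Orders the queue so the light with the smallest nextFrame is on top.
struct LaterFirst {
    bool operator()(const ScheduledLight& a, const ScheduledLight& b) const {
        return a.nextFrame > b.nextFrame;
    }
};

using LightQueue =
    std::priority_queue<ScheduledLight, std::vector<ScheduledLight>, LaterFirst>;

// Placeholder policy: nearby lights refresh every frame, distant ones
// only once every five frames. The thresholds are arbitrary.
int updateInterval(float distanceToPlayer) {
    if (distanceToPlayer < 10.0f) return 1;
    if (distanceToPlayer < 30.0f) return 2;
    return 5;
}

// Pops every light that is due at `frame`, reschedules it, and returns
// the ids of the lights to refresh this frame.
std::vector<int> lightsToUpdate(LightQueue& queue, int frame) {
    std::vector<int> due;
    while (!queue.empty() && queue.top().nextFrame <= frame) {
        ScheduledLight light = queue.top();
        queue.pop();
        assert(light.interval >= 1);  // guarantees the loop terminates
        due.push_back(light.lightId);
        light.nextFrame = frame + light.interval;
        queue.push(light);
    }
    return due;
}
```

Each frame the renderer would call `lightsToUpdate` and refresh only the returned lights, reusing the previous result for everything else.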

Anyway, this isn’t a complete loss… Worst case scenario, I’ll be able to use this system to create ambient occlusion maps to get a slightly more realistic look without having to resort to SSAO (which I only liked in Crysis)…

Unfortunately, for the next few days, I’ll be busy with some hard stuff at work, which means no energy in my free time to work on this…

Stay tuned for more adventures with lighting pipelines! 🙂