Finally some of the work insanity has died down, so I can go back to doing some cool stuff in my spare time again…

Now that the basis of the renderer for our next project is done, I still feel there’s loads of stuff to be done in the environment lighting… Since it is unfeasible to have shadowmaps on all visible lights (I still have to determine a good algorithm to select which lights get shadowmapped in real time), I’ve decided to add lightmaps to the environment and, in the process (since it’s easy to add), pre-rendered ambient occlusion maps as well.

I’ve worked on lightmap generators in the past.

My first experiments were with direct-lighting lightmaps, which only account for the light that shines directly on objects. I built my own UV-map generator, which didn’t work that badly, but it was slow and some of the results were terrible… The advantage of that renderer was that I could add stained-window effects (through alpha maps) and other nifty effects to the resulting lightmap…

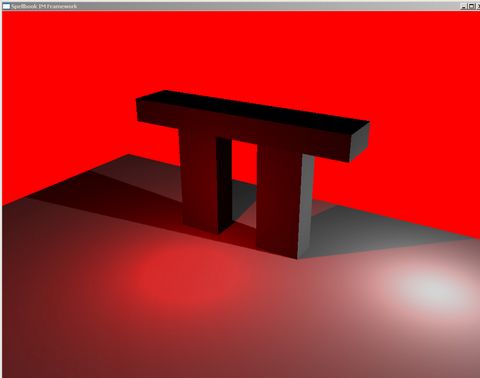

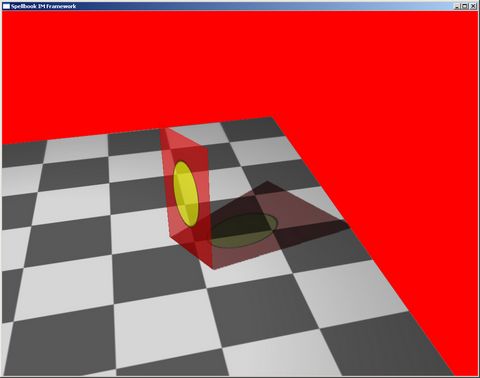

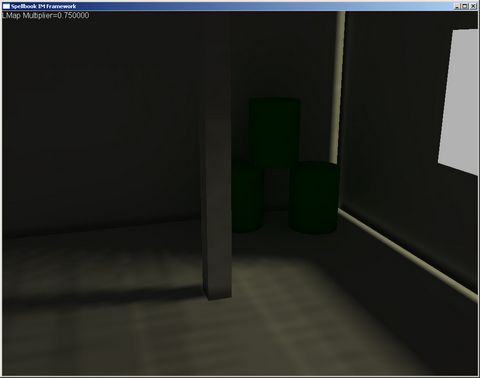

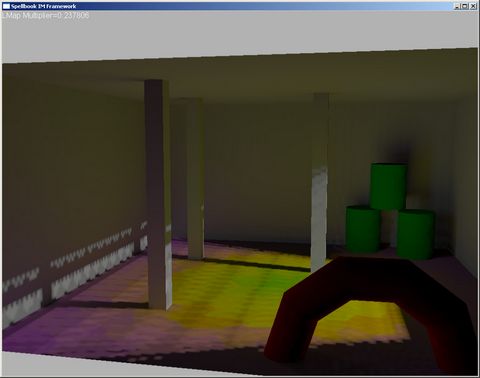

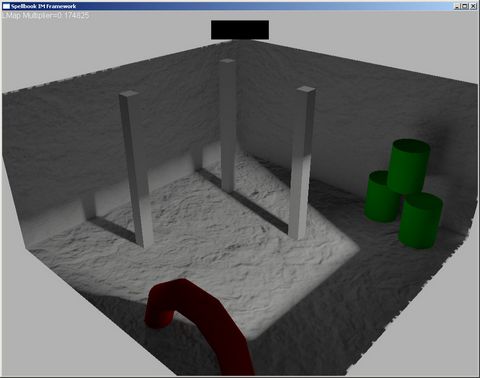

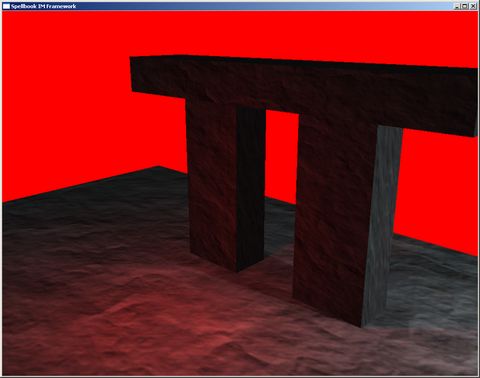

Some pictures of the obtained results (over 3 years ago):

Two sources of light, one red and one white, no textures.

Same as above, but textured, which makes everything look infinitely better, since it disguises the imperfections caused by the limited resolution of the lightmap.

An alpha object that blocks part of the light and tints it.

An alpha object that blocks part of the light and tints it.

Again an alpha object, but with a textured alpha-map… The effect is quite nice.

Although the results I obtained with this were nice enough, I was never too happy with the UV-generation and the lack of soft-shadows, so a couple of years ago, I did some experiments with hardware-accelerated radiosity.

I replaced my UV-generator with the one in the DirectX D3DX library, and used the usual HW-accelerated radiosity algorithm (render the scene from the point of view of the patch, average the results, rinse and repeat).
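The "render, average, rinse and repeat" loop is a gathering iteration: on each pass, every patch collects the light currently leaving all the other patches. Here is a minimal CPU sketch of that loop, assuming the form factors between patches are already known (in the hardware version, rendering the scene from the patch is what estimates them). The function name and the tiny three-patch scene are hypothetical, and only one color channel is shown; RGB would just run this three times.

```python
def radiosity_iterations(emission, reflectivity, form_factors, iterations):
    """Jacobi-style gathering: B_i = E_i + rho_i * sum_j F_ij * B_j."""
    n = len(emission)
    radiosity = list(emission)  # before the first pass only emitters are lit
    for _ in range(iterations):
        # Gather the light arriving at each patch from every other patch.
        gathered = [
            sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
        radiosity = [emission[i] + reflectivity[i] * gathered[i] for i in range(n)]
    return radiosity


# Hypothetical scene: patch 0 is an emissive wall, patches 1 and 2 only reflect.
emission = [1.0, 0.0, 0.0]
reflectivity = [0.0, 0.5, 0.5]
form_factors = [
    [0.0, 0.3, 0.3],
    [0.3, 0.0, 0.2],
    [0.3, 0.2, 0.0],
]
after_one = radiosity_iterations(emission, reflectivity, form_factors, 1)
after_six = radiosity_iterations(emission, reflectivity, form_factors, 6)
```

After one pass the reflecting patches only see the emitter; later passes add the light they bounce onto each other, which is where the color bleeding in the screenshots comes from.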

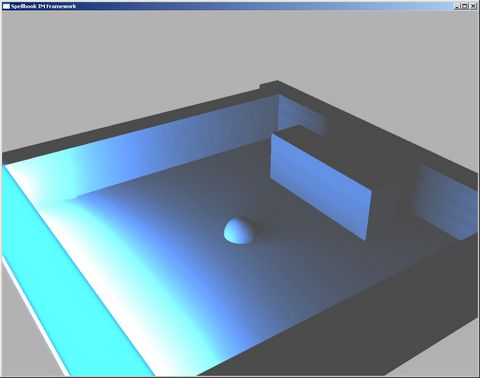

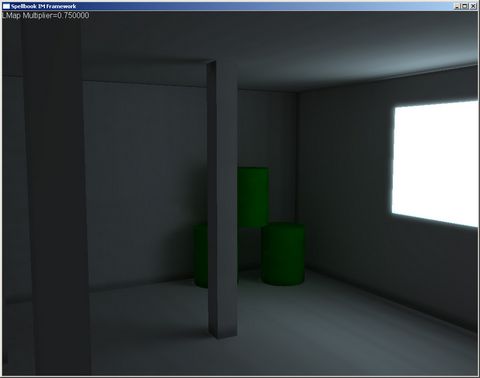

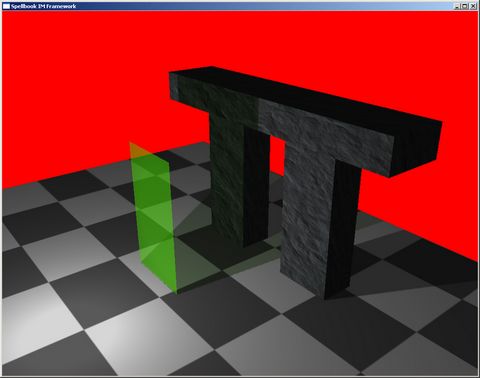

While the results weren’t terrible, it demanded too much tweaking to get good results:

The wall is an emissive light source.

After 6 iterations, the shadows become more “real” and you can see some color blending near the green “barrels”, which is pretty neat. The window is a glowing polygon, to simulate intense outside light.

Using a light that’s not white, and a spherical light source (with volume) that’s not visible from the whole room. After 6 iterations, the scene looks warmer, but it has too many artifacts on the floor (sampling artifacts) and on the near wall (the wall was too close to the patch, so the near plane clipped it and it never got drawn into the buffer, which led to that silly illumination).

Using a colored floor (at a much lower resolution than the previous tests), the scene again becomes warmer and indirectly lit.

I supported normal maps in the rendering, and the result is quite nice, disguising some artifacts behind the more complex lighting.

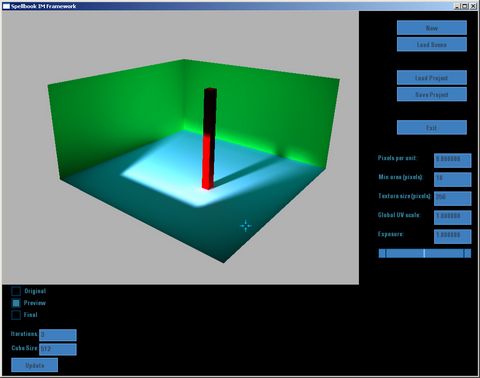

I even did an application that would enable me to change the reflectivity parameters:

But it was too much work getting this to work properly, too many small tweaks and too much time to actually see results… This is when I abandoned this project and moved on to other stuff (I know, I lack focus sometimes).

Anyway, back to the present, I decided to pick this up again… For the current game, I think radiosity is overkill, but I intend to divide the generation of lightmaps into two parts, so I can replace the direct lighting with radiosity later:

- Preprocessor

- Lighting engine

Basically, the preprocessor will take care of all the tasks that are common to any lightmap processor:

- See what meshes are present in the scene

- Create UV-map per mesh (using as a metric for resolution the surface area of the mesh and an adjustable parameter)

- Create instances of objects that share the same mesh (effectively copying the mesh with the new UVs)

- Create a UV-atlas that aggregates the UV-maps

- Load into RAM the textures used (diffuse map, alpha map, normal map, emissive, etc). This data needs to be in accessible memory since we’ll need to sample it

- Initialize the lighting engine (give it all the meshes, etc., so it can build acceleration structures for raycasts, etc.)

- For all lumels in all the lightmaps, call the light evaluation function on the lighting engine. This will probably be called multiple times for each lumel, taking into account the possible area of the lumel, so that we get anti-aliasing and reduce the artifacts caused by edge conditions. These samples will be averaged (either by standard average or some kind of distribution).

- This last step will be repeated a certain amount of times, depending on the instructions of the lighting engine.

- If requested, build an ambient occlusion map, by asking the lighting engine for an ambient occlusion factor. This will also take into consideration the area of the lumel, as in the per-lumel evaluation step above.

- Save the generated lightmaps
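Two of the steps above are easy to pin down in code: picking a lightmap resolution from the surface area plus an adjustable density, and evaluating each lumel several times over its area before averaging. This is only a sketch under my own assumptions: square lightmaps, jittered random samples with a plain average, and hypothetical names (`lightmap_size`, `evaluate_lumel`, `bake`), with `hard_shadow_edge` standing in for the real lighting engine.

```python
import math
import random


def lightmap_size(surface_area, lumels_per_unit, min_size=4, max_size=1024):
    """Pick a square lightmap edge (in lumels) from the mesh surface area.

    lumels_per_unit is the adjustable density parameter (lumels per world unit).
    """
    edge = math.ceil(math.sqrt(surface_area) * lumels_per_unit)
    return max(min_size, min(max_size, edge))


def evaluate_lumel(x, y, size, evaluate, samples=16, rng=None):
    """Average several jittered samples spread over the lumel's area."""
    if rng is None:
        rng = random.Random(0)
    total = [0.0, 0.0, 0.0]
    for _ in range(samples):
        # Jitter inside the lumel footprint, then normalise to [0, 1) UV space.
        u = (x + rng.random()) / size
        v = (y + rng.random()) / size
        r, g, b = evaluate(u, v)
        total[0] += r
        total[1] += g
        total[2] += b
    return tuple(channel / samples for channel in total)


def bake(size, evaluate, samples=16):
    """Evaluate every lumel of a size x size lightmap, row by row."""
    rng = random.Random(0)
    return [
        [evaluate_lumel(x, y, size, evaluate, samples, rng) for x in range(size)]
        for y in range(size)
    ]


def hard_shadow_edge(u, v):
    """Stand-in lighting engine: lit on the left half, dark on the right."""
    return (1.0, 1.0, 1.0) if u < 0.5 else (0.0, 0.0, 0.0)


# A 3x3 lightmap: the middle column straddles the shadow edge at u = 0.5.
small_map = bake(3, hard_shadow_edge, samples=64)
```

The middle lumel ends up with an intermediate grey instead of snapping to fully lit or fully dark, which is the anti-aliasing effect the multiple samples are there for.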

The lighting engine for now will be a simple Direct Light system. I want to take into consideration the following things:

- Take into account diffuse, normal and emissive maps

- An octree will be generated with all the world geometry for faster raycasting

- Raycasting will take into account the possible intersection of alpha/tinted objects and do the correct coloring to account for that

- Ambient occlusion will be computed by raycasting over a hemisphere around the lumel being computed, and considering what fraction of the rays gets intersected.
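To make the last two points concrete, here is a small sketch of both raycasting jobs. Everything in it is an assumption for illustration: occluders are plain spheres tested by brute force (no octree), a tinted blocker multiplies the light by `tint * (1 - alpha)`, and the hemisphere is sampled uniformly. The function names are hypothetical too.

```python
import math
import random


def ray_hits_sphere(origin, direction, center, radius, max_dist):
    """Return True if a normalised ray hits the sphere within (0, max_dist)."""
    oc = [origin[i] - center[i] for i in range(3)]
    b = sum(oc[i] * direction[i] for i in range(3))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < t < max_dist for t in (-b - root, -b + root))


def light_transmittance(point, light_pos, occluders):
    """RGB factor of the light that reaches `point`.

    Each occluder is (center, radius, tint, alpha); alpha 1.0 means opaque.
    """
    to_light = [light_pos[i] - point[i] for i in range(3)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    factor = [1.0, 1.0, 1.0]
    for center, radius, tint, alpha in occluders:
        if ray_hits_sphere(point, direction, center, radius, dist):
            # Assumed model: the blocker lets through tint * (1 - alpha).
            for i in range(3):
                factor[i] *= (1.0 - alpha) * tint[i]
    return tuple(factor)


def ambient_occlusion(point, normal, occluders, rays=256, max_dist=10.0, seed=0):
    """Fraction of hemisphere rays that escape (1.0 means fully open)."""
    rng = random.Random(seed)
    escaped = 0
    for _ in range(rays):
        # Rejection-sample a uniform direction, then flip it into the hemisphere.
        while True:
            d = [rng.uniform(-1.0, 1.0) for _ in range(3)]
            length_sq = sum(v * v for v in d)
            if 1e-9 < length_sq <= 1.0:
                break
        length = math.sqrt(length_sq)
        d = [v / length for v in d]
        if sum(d[i] * normal[i] for i in range(3)) < 0.0:
            d = [-v for v in d]
        blocked = any(
            ray_hits_sphere(point, d, center, radius, max_dist)
            for center, radius, _tint, _alpha in occluders
        )
        if not blocked:
            escaped += 1
    return escaped / rays


# Hypothetical scene: a half-transparent red sphere between the lumel and the light.
red_glass = ((0.0, 5.0, 0.0), 1.0, (1.0, 0.0, 0.0), 0.5)
tinted = light_transmittance((0.0, 0.0, 0.0), (0.0, 10.0, 0.0), [red_glass])
```

In the real thing the loop over occluders would be replaced by a query against the octree, and the escaped fraction would be written into the ambient occlusion map.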

This system should also consider multi-processor usage (for direct light at least; the HW-accelerated radiosity can’t take advantage of multiple processors, since there’s just one GPU).

Most of this stuff I already know how to do, since I’ve done it in previous projects… The exceptions are the octree generation (I usually use loose octrees, so there’s loads of code for splitting triangles, etc., that has to be written), and the part where I consider the area of the lumel for smoothing the lightmap.
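Since every lumel of a direct-light bake is independent of the others, one simple way to spread the work is to cut the lightmap into bands of rows and hand one band to each worker. A rough sketch of that idea, with hypothetical names (`split_rows`, `bake_band`, `bake_parallel`) and `checker` standing in for the real per-lumel evaluation:

```python
from concurrent.futures import ThreadPoolExecutor


def split_rows(height, workers):
    """Split lightmap rows into contiguous (start, end) bands, one per worker."""
    band = max(1, -(-height // workers))  # ceiling division, at least one row
    return [(start, min(start + band, height)) for start in range(0, height, band)]


def bake_band(args):
    """Evaluate every lumel in rows [start, end) and return them with their offset."""
    start, end, width, evaluate = args
    rows = [[evaluate(x, y) for x in range(width)] for y in range(start, end)]
    return start, rows


def bake_parallel(width, height, evaluate, workers=4, executor_cls=ThreadPoolExecutor):
    """Bake the bands concurrently and stitch them back together in row order."""
    jobs = [(start, end, width, evaluate) for start, end in split_rows(height, workers)]
    lightmap = [None] * height
    with executor_cls(max_workers=workers) as pool:
        for start, rows in pool.map(bake_band, jobs):
            lightmap[start:start + len(rows)] = rows
    return lightmap


def checker(x, y):
    """Hypothetical stand-in for the per-lumel direct-light evaluation."""
    return float((x + y) % 2)


baked = bake_parallel(4, 10, checker)
```

In CPython the threads used here share the interpreter lock, so this only shows the structure; passing `ProcessPoolExecutor` as `executor_cls` gives real multi-core scaling, as long as the evaluation function is a picklable top-level function.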

Rendering the lightmaps in the deferred rendering pipeline will require an additional pass, since I don’t have any space left in the G-Buffer for three more components (RGB, since I want colored lightmaps). That’s OK, though: the extra pass lets me fill the stencil buffer so the static lights aren’t drawn onto the static geometry again, while I can still use the static lights on the dynamic geometry. This will also enable me to light the environment with dynamic lights (on both static and dynamic geometry) without wasting too much performance. Adding the concept of light volumes to both types of lights will also be easier this way, since I can filter out the parts of the scene that shouldn’t be affected. The ambient occlusion term can be stored with the ambient component already in the G-Buffer, so that shouldn’t have a big performance hit.

All the lights and geometry that are present in the input scene will be considered static… The bad part of this is that opening doors won’t have the dramatic/cool effect I would like, unless I use dynamic lights and shadowmaps in the right circumstances.

Anyway, I’ll hopefully have something working by next week (hopefully in time for the Screenshot Saturday thingie which I enjoy seeing every week).

Ah, just recalled… why write my own lightmap generator, instead of using an existing (free) one? Well, to be honest, I never found one that produced nice results without turning the writing of an importer/exporter into a nightmare… I feel that this is the kind of thing that’s closely linked to your pipeline and your own way of doing stuff… besides, this is fun code! 🙂

So, wish me luck!